Opinion

Ana Maraloi

8 min read

5 Dec 2025

Artificial Intelligence, also known as AI, is plaguing everything from news headlines and business investments to that suspiciously well-written essay you submitted despite not attending any of the lectures (don’t lie, we all know you’ve done it at least once). There is no doubt that we are living in a new age: the era of chat.

Whilst the rest of us are busy asking ChatGPT to ‘make this text sound academic but not AI generated’, somewhere in Stockholm a politician is probably doing the same. Picture Ulf Kristersson hunched over his computer, typing: “Dear ChatGPT, please fix Sweden.”

Earlier this month, in an interview with the Swedish financial newspaper Dagens Industri, Sweden’s prime minister Ulf Kristersson talked about his use of the AI chatbots ChatGPT and Le Chat, American and French respectively.[1] He mentioned that he often uses them ‘to get a second opinion’ or to ask what ‘others would have done’. This has sparked questions about the continuing rise of the AI bubble and how it is reshaping existing power structures, including the role of those supposedly making decisions on our behalf.

Kristersson was soon met with criticism from the Swedish newspaper Aftonbladet, which questioned why he would turn to a chatbot instead of his large, well-paid staff of experts. There are also security concerns: Kristersson mentioned that he takes few, if any, security precautions when using the chatbot, meaning the Swedish Prime Minister’s data could end up stored on American servers.

However, Ulf is not the only Swedish politician fond of using AI. Ebba Busch, leader of the Christian Democrats, was caught using a fake AI-generated quote in her speech at Almedalen Week.[2] Almedalen Week is an annual political and social forum held in Visby on the island of Gotland, where politicians, journalists, business leaders and the public gather for a week of free and open discussion on a wide range of societal issues.

On the surface, this may not seem significant. After all, other political parties, such as the AfD in Germany, have found much more interesting uses for generative AI in their political campaigns. But maybe that’s what makes the Swedish case so Swedish. The Swedes embody jantelagen, the unwritten social code against putting yourself above others, even in their politics: the story here is not deepfakes or political drama, but the quiet normalization of AI in politics.

Is this hiccup in Swedish governance a system error? Or, if we look a bit more closely, an invitation? Because if even the country that built the world’s most transparent democracy can’t fully control how its leaders use AI, what does that mean for the rest of us, the students preparing to lead in an ‘I summarized this whole lecture using ChatGPT’ age?

The Art of Rhetoric

What even is rhetoric? At its core, rhetoric is the art of expressing oneself persuasively, a skill that politicians, CEOs and that one overly enthusiastic group project leader all try to master. It was systematized by Aristotle in the fourth century BC; his three modes of persuasion, often drawn today as the Rhetorical Triangle, explain the three elements that make a speech appeal to an audience.

We first have ethos, the Greek term for character (and the root of the word ‘ethics’). Ethos represents the speaker’s character and credibility. It is established through a variety of factors such as status, awareness and professionalism. Building ethos helps your audience trust what you are saying.

We then have pathos, the Greek term for emotion. This represents how an audience feels or experiences a message. Pathos works in a speech by appealing to an audience’s emotions – using techniques such as storytelling, vivid language, and personal anecdotes.

Finally, we have logos, the Greek term for reason (and the root of the word ‘logic’). Logos represents the facts and research that provide proof and evidence for a claim. Laying out the reasoning behind your claims helps convince an audience that your argument is well structured and worth their time.

Rhetoric plays a crucial role in politics because persuasion is the core of political action and communication. But what role does AI play in this exactly? Let’s find out for ourselves, shall we?

We can see that ChatGPT has thrown a bunch of metaphors around in the hope of sounding inspirational. We can also see that the speech is so meticulously structured that it loses the spontaneity of human speech. Sure, you could argue that I didn’t give Chat a detailed enough prompt, but no matter how specific the prompt, the bot will never quite feel the struggle behind the words. There’s only so much pathos an algorithm can fake before humanity runs out of data. And that’s what an audience picks up on the most.

Rhetoric is fluid. Whether you’re a prime minister or a student stressfully applying to your hundredth internship of the season, rhetoric isn’t about perfection but about authenticity: the cracks, the glitches and the human touch are what make a message memorable.

System Error: Cracks in the idealized ‘lagom’ system

So far we’ve looked at AI from a satirical point of view, but what are the underlying effects of implementing AI systems in daily practice? Is there a darker side to Kristersson and Busch simply getting ‘second opinions’ on their work?

Sweden’s Social Insurance Agency, Försäkringskassan, has been using AI systems to flag individuals for benefit fraud inspections since 2013.[3] However, a 2024 investigation by Svenska Dagbladet found that the system unjustly flagged marginalized groups for further fraud inspections, with disparities along lines such as gender, education level, income level and ethnicity. This is significant because fraud investigators have a high degree of power: they can go through someone’s social media accounts and obtain private data from schools and banks. Critics argue that this violates European data protection regulation, because the authority has no legal basis for profiling people.

Are these checks random ‘glitches’, or do they reflect an underlying bias? An AI model’s output is shaped by who trains it and on what data. Even systems designed to be neutral fundamentally reflect the data they are fed. If people who live in a certain neighbourhood are flagged more often, why is that? These deciding factors don’t appear by coincidence; they reflect the structural prejudices of the societies that create them. In a country where immigration and integration dominate political discussion, the ‘objectivity’ of the algorithm serves to reinforce existing narratives instead of challenging them.

Once bias enters the system, it rewrites the narrative of who can be trusted and who is being watched, and it starts to create a pattern of who ‘fits’ and who doesn’t. When Sweden Democrats leader Jimmie Åkesson proposed offering unemployed immigrants 350,000 SEK to leave Sweden,[4] it wasn’t just a political statement but a reflection of a broader algorithmic ‘trend’. If politicians start to formulate policies and internalize narratives built on evidence from a supposedly ‘neutral’ algorithm, we blur the line between objective analysis and automated assumption.

A Wider Trend: Populist Rhetoric & Global Context

What if AI is doing more than just making politics transactional? We’ve seen a rapid rise in the use of generative AI in political campaigns worldwide. But what is it about these tools that captures citizens’ attention in a way politicians could not before?

At its core, generative AI is used because it can quickly produce emotionally resonant content, capturing the audience’s attention through pathos.

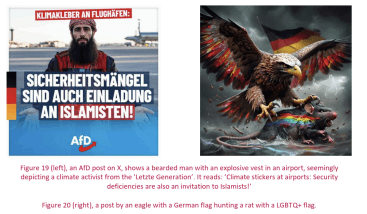

Let’s analyze the AfD party’s use of Generative AI:

The image relies on symbolic messaging: it combines familiar national symbols with culturally charged elements in ways that grab attention. Even in a country like Germany, known for being LGBT-friendly, the image is designed to provoke an emotional reaction. It’s the kind of image that makes you blink twice before mindlessly scrolling on to the next reel. In this case, AI makes it possible to test visuals that maximize emotional impact, making abstract political goals (like ‘saving Germany’) feel more immediate and memorable.

Through this kind of populist appeal, governments can gain democratic legitimacy. In democracies, governments and political parties draw legitimacy from popular support, not just institutional authority. AI-generated messages can be tailored to resonate with audiences who were perhaps not previously engaged in politics, making policy and ideology easier to understand and more emotionally engaging. This emotional resonance amplifies a perceived alignment between citizens and leaders, and audience engagement in turn becomes an optimization metric for persuasion: AI can generate a feedback loop that keeps these campaigns persuasive in a media-saturated environment. Think of it as your personalized Instagram Reels feed, but on steroids.

The system error lies in a mismatch in how governments are choosing to gain democratic legitimacy: through AI campaigns rather than accountability and transparency. Whilst AI-crafted messaging can reach groups that were previously less politically active (e.g. lower-income or working-class citizens), this engagement may stay on the surface, responding to perception rather than substance.

Populism isn’t inherently bad. It can get people who usually scroll past politics to actually pay attention. The system error we’ve explored isn’t static; it is an ongoing tension shaped by how our leaders, institutions and citizens navigate these tools, and by how AI reshapes the way messages reach us. With this power comes a responsibility: to ensure that persuasion doesn’t replace substance, and that authenticity remains at the heart of communication.

Sources

[1] https://www.dn.se/sverige/forskare-ifragasatter-kristerssons-ai-anvandning-rostade-inte-pa-chat-gpt/

[2] https://www.aftonbladet.se/nyheter/a/gw8Oj9/ebba-busch-anvande-falskt-ai-citat-ber-om-ursakt

[3] https://www.amnesty.org/en/latest/news/2024/11/sweden-authorities-must-discontinue-discriminatory-ai-systems-used-by-welfare-agency/

[4] https://www.svt.se/nyheter/inrikes/sd-vill-fa-arbetslosa-att-lamna-sverige-med-hojt-bidrag

[5] https://www.isdglobal.org/wp-content/uploads/2025/02/The-use-of-generative-AI-by-the-German-Far-Right.pdf